SIG, The Humanoid

JST, ERATO, Kitano Symbiotic Systems Project (last update: 2002/10/25)

As of 2003, the SIG project has been transferred to Kyoto University.

Overview

The humanoid SIG is designed as a testbed for integrating perceptual information to control motors with a high degree of freedom (DOF).

- The body has 4 DOFs, driven by 4 DC motors.

- Each DC motor is controlled by a potentiometer.

- A pair of Sony EVI-G20 CCD cameras for stereo visual input

- Each camera has 3 DOFs: pan, tilt, and zoom. Focus is adjusted automatically. The offset of the camera position can be obtained from each camera.

- Two pairs of omnidirectional microphones (Sony ECM-77S electret condenser microphones)

- One pair of microphones is installed at the ear positions of the head to gather sounds from the external world. Each microphone of the other pair is installed very close to its corresponding ear microphone to gather sounds from the internal world.

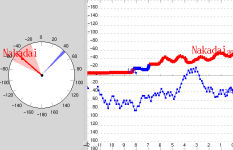

(see Active Audition; JPEG; 450Kbytes)

- A cover of the body

- It reduces the sound emitted to the external environment, which is expected to reduce the complexity of sound processing.
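The page does not say how the inner microphones are used. One plausible use, assumed here purely for illustration, is to flag time frames in which the inner microphone is louder than the matching ear microphone, since such frames are likely dominated by internal (motor) noise. The function names, frame length, and power ratio below are hypothetical and are not taken from the SIG software.

```python
from typing import List, Sequence


def frame_power(samples: Sequence[float]) -> float:
    """Mean squared amplitude of one frame (0.0 for an empty frame)."""
    return sum(s * s for s in samples) / len(samples) if samples else 0.0


def noisy_frames(
    outer: Sequence[float],
    inner: Sequence[float],
    frame_len: int = 4,      # hypothetical frame length in samples
    ratio: float = 1.0,      # hypothetical inner/outer power threshold
) -> List[bool]:
    """Return one flag per frame: True if the inner microphone is louder.

    `outer` is the signal from an ear microphone, `inner` the signal from
    the microphone mounted next to it under the cover. Both are assumed
    to be time-aligned and sampled at the same rate.
    """
    flags = []
    n = min(len(outer), len(inner))
    for start in range(0, n - frame_len + 1, frame_len):
        p_out = frame_power(outer[start:start + frame_len])
        p_in = frame_power(inner[start:start + frame_len])
        flags.append(p_in > ratio * p_out)
    return flags


# Frame 1: external sound dominates. Frame 2: internal noise dominates.
outer_signal = [0.5] * 4 + [0.1] * 4
inner_signal = [0.1] * 4 + [0.4] * 4
flags = noisy_frames(outer_signal, inner_signal)
```

A real system would more likely compare the two signals per frequency band rather than per time frame; the time-domain version is used here only to keep the sketch short.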

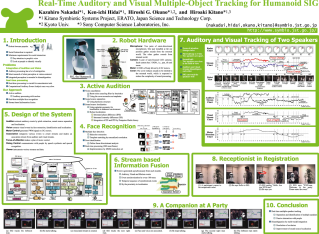

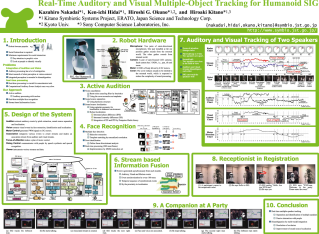

Real-Time Auditory and Visual Multiple-Object Tracking

We have developed a real-time auditory and visual tracking system that follows multiple objects for a humanoid in real-world environments. Real-time processing is crucial for sensorimotor tasks in tracking, and multiple-object tracking is crucial for real-world applications. Tracking multiple sound sources requires perceiving a mixture of sounds and cancelling the motor noise caused by body movements; however, real-time processing of this kind has not been reported so far. Real-time tracking is attained by fusing information obtained from sound source localization, multiple face recognition, speaker tracking, focus-of-attention control, and motor control. Auditory streams with sound source direction are extracted from sounds sampled at 48 kHz by an active audition system with motor noise cancellation capability. Visual streams with face ID and 3D position are extracted by combining skin-color extraction, correlation-based matching, and multiple-scale image generation from a single camera. These auditory and visual streams are associated by comparing their spatial locations, and the associated streams are used to control the focus of attention. Auditory, visual, and association processing are performed asynchronously on different PCs connected by a TCP/IP network. The resulting system, implemented on an upper-torso humanoid, can track multiple objects with a delay of 200 msec, which is imposed by visual tracking and network latency.
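The association step described above, pairing auditory and visual streams whose spatial locations agree, can be sketched as follows. This is a minimal illustration and not the SIG implementation: it reduces each stream to a single azimuth angle, uses a greedy closest-pair match, and the class names, the `associate` function, and the 10-degree threshold are all assumptions introduced for the example.

```python
from dataclasses import dataclass
from typing import List, Tuple


@dataclass
class AuditoryStream:
    stream_id: int
    azimuth_deg: float   # direction of the sound source


@dataclass
class VisualStream:
    face_id: str
    azimuth_deg: float   # direction derived from the face position


def angular_gap(a: float, b: float) -> float:
    """Smallest absolute difference between two angles, in degrees."""
    d = abs(a - b) % 360.0
    return min(d, 360.0 - d)


def associate(
    auditory: List[AuditoryStream],
    visual: List[VisualStream],
    max_gap_deg: float = 10.0,   # hypothetical tolerance
) -> List[Tuple[int, str]]:
    """Greedily pair streams whose directions differ by at most max_gap_deg."""
    candidates = sorted(
        (angular_gap(a.azimuth_deg, v.azimuth_deg), i, j)
        for i, a in enumerate(auditory)
        for j, v in enumerate(visual)
    )
    used_a, used_v = set(), set()
    pairs = []
    for gap, i, j in candidates:
        if gap > max_gap_deg:
            break                      # remaining candidates are even farther apart
        if i in used_a or j in used_v:
            continue                   # each stream joins at most one pair
        used_a.add(i)
        used_v.add(j)
        pairs.append((auditory[i].stream_id, visual[j].face_id))
    return pairs


sounds = [AuditoryStream(1, -30.0), AuditoryStream(2, 42.0)]
faces = [VisualStream("alice", 40.0), VisualStream("bob", -75.0)]
pairs = associate(sounds, faces)
```

In this example only sound stream 2 and the face labelled "alice" lie within the tolerance, so they form the single associated stream; the unpaired streams would remain auditory-only or visual-only.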

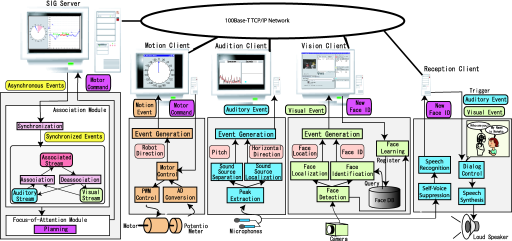

System architecture

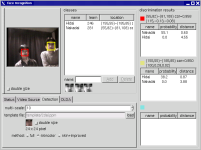

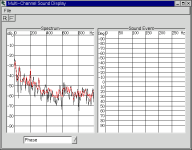

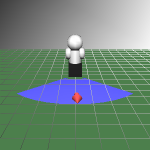

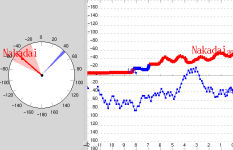

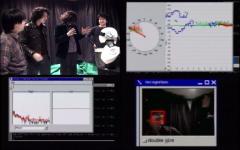

Screenshots of Modules

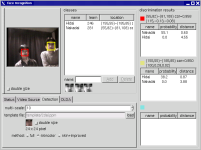

Vision module

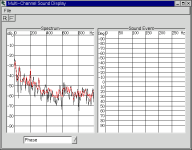

Audition module

Motor module

Viewer module

Video

Demo movie (MPEG; 46.2Mbytes) consists of

- Introduction of SIG, and

- Demonstration of real-time human-humanoid interaction.

A receptionist in registration

A companion at a party

SIG Hardware

SIG Software

SIG demonstrations

Posters

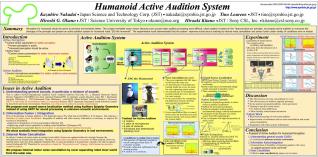

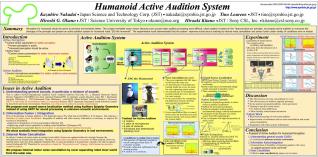

Humanoid Active Audition System - Humanoids 2000: (JPEG; 450Kbytes)

Real-Time Auditory and Visual Multiple-Object Tracking for Humanoid SIG - AAAI 2001 Robot Exhibition: (PNG; 598Kbytes)

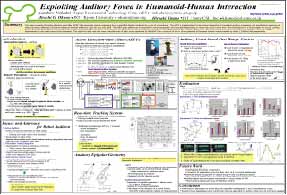

Exploiting Auditory Fovea in Humanoid-Human Interaction - AAAI 2002 Technical Poster Session: (JPEG; 565Kbytes)

Publications

- Kazuhiro Nakadai, Ken-ichi Hidai, Hiroshi Mizoguchi, Hiroshi G. Okuno, Hiroaki Kitano:

Real-Time Auditory and Visual Multiple-Speaker Tracking for Human-Robot Interaction, Journal of Robotics and Mechatronics, pp. 479-489, JSME, Oct. 2002.

- Hiroshi G. Okuno, Kazuhiro Nakadai, Ken'ichi Hidai, Hiroshi Mizoguchi, Hiroaki Kitano: Human-Robot Non-Verbal Interaction Empowered by Real-Time Auditory and Visual Multiple-Talker Tracking, Advanced Robotics, in print, Robotics Society of Japan, 2002.

- Kazuhiro Nakadai, Hiroshi G. Okuno, Hiroaki Kitano:

Auditory Fovea Based Speech Separation and Its Application to Dialog System

Proc. of IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS-2002), pp.1314-1319, IEEE, Lausanne, Switzerland, Oct. 2002.

- Kazuhiro Nakadai, Hiroshi G. Okuno, Hiroaki Kitano:

Auditory Fovea Based Speech Enhancement and Its Application to Human-Robot Dialog System

Proc. of 7th International Conference on Spoken Language Processing (ICSLP-2002), pp.1817-1820, Denver, USA, Sep. 2002.

- Hiroshi G. Okuno, Kazuhiro Nakadai, Hiroaki Kitano:

Real-time Sound Source Localization and Separation for Robot Audition

Proc. of 7th International Conference on Spoken Language Processing (ICSLP-2002), pp.193-196, Denver, USA, Sep. 2002.

- Hiroshi G. Okuno, Kazuhiro Nakadai, Hiroaki Kitano:

Realizing Audio-Visually triggered ELIZA-like non-verbal Behaviors.

Seventh Pacific Rim International Conference on Artificial Intelligence (PRICAI-2002), pp.552-562, Tokyo (NII), Japan, Aug. 2002.

- Kazuhiro Nakadai, Hiroshi G. Okuno, Hiroaki Kitano:

Exploiting Auditory Fovea in Humanoid-Human Interaction

Proc. of the Eighteenth National Conference on Artificial Intelligence (AAAI-2002), pp.431-438, Edmonton, Canada, Aug. 2002.

- Hiroshi G. Okuno, Kazuhiro Nakadai, Hiroaki Kitano:

Non-verbal ELIZA-like Human Behaviors in Human-Robot Interaction through Real-Time Auditory and Visual Multiple-Talker Tracking

The Third International Cognitive Robotics Workshop (CogRob2002), pp.59-65, Edmonton, Canada, Jul. 2002.

- Hiroshi G. Okuno, Kazuhiro Nakadai, Hiroaki Kitano:

Social Interaction of Humanoid Robot Based on Audio-Visual Tracking

Proc. of 18th International Conference on Industrial and Engineering Applications of Artificial Intelligence and Expert Systems (IEA/AIE-2002),

Lecture Notes in Artificial Intelligence, Springer-Verlag.

Cairns, Australia, June 2002.

- Kazuhiro Nakadai, Ken-ichi Hidai, Hiroshi G. Okuno, Hiroaki Kitano:

Real-Time Speaker Localization and Speech Separation by Audio-Visual Integration

Proc. of IEEE International Conference on Robotics and Automation (ICRA 2002), pp.1043-1049, Washington D.C., May. 2002.

- Hiroshi G. Okuno, Kazuhiro Nakadai, Lourens, T., Hiroaki Kitano: Sound and Visual Tracking by Active Audition, Q. Jin, J. Li, N. Zhang, J. Cheng, C.Yu, S. Noguchi (Eds.) Enabling Society with Information Technology, pp.174-185, Springer-Verlag, Tokyo, Jan. 2002.

- Kazuhiro Nakadai, Ken-ichi Hidai, Hiroshi G. Okuno, Hiroaki Kitano:

Real-Time Active Human Tracking by Hierarchical Integration of Audition and Vision

Proc. of Second IEEE-RAS International Conference on Humanoid Robots (Humanoids2001), pp.91-98, Tokyo, Nov. 2001.

- Kazuhiro Nakadai, Ken-ichi Hidai, Hiroshi G. Okuno, Hiroaki Kitano:

Epipolar Geometry Based Sound Localization and Extraction for Humanoid Audition

Proc. of IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS-2001), pp.1395-1401, IEEE, Maui, Hawaii, Oct. 2001.

- Hiroshi G. Okuno, Kazuhiro Nakadai, Tino Lourens, Hiroaki Kitano:

Human-Robot Interaction Through Real-Time Auditory and Visual Multiple-Talker Tracking

Proceedings of IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS-2001), pp.1402-1409, IEEE, Maui, Hawaii, Oct. 2001.

- Hiroshi G. Okuno, Kazuhiro Nakadai, Tino Lourens, Hiroaki Kitano:

Separating three simultaneous speeches with two microphones by integrating auditory and visual processing.

Proc. of European Conference on Speech Processing (Eurospeech 2001), pp.2643-2646, Aalborg, Denmark, Sep. 2001.

- Kazuhiro Nakadai, Ken-ichi Hidai, Hiroshi Mizoguchi, Hiroshi G. Okuno, Hiroaki Kitano:

Real-Time Multiple Speaker Tracking by Multi-Modal Integration for Mobile Robots

Proc. of European Conference on Speech Processing (Eurospeech 2001), pp.1193-1196, Aalborg, Denmark, Sep. 2001.

- Kazuhiro Nakadai, Ken-ichi Hidai, Hiroshi Mizoguchi, Hiroshi G. Okuno, Hiroaki Kitano:

Real-Time Auditory and Visual Multiple-Object Tracking for Robots.

Proc. of 17th International Joint Conference on Artificial Intelligence (IJCAI-01), 1425-1432, Seattle, Aug. 2001.

- Lourens, T., Kazuhiro Nakadai, Hiroshi G. Okuno, Hiroaki Kitano: Detection of Oriented Repetitive Alternating Patterns in Color Images --- A Computational Model of Monkey Grating Cells.

Proc. of Sixth International Work-Conference on Artificial and Natural Neural Networks (IWANN2001), Lecture Notes in Artificial Intelligence, No.2084, 95-107, Springer-Verlag. Granada, Spain, June 2001.

- Hiroshi G. Okuno, Kazuhiro Nakadai, Lourens, T., Hiroaki Kitano:

Sound and Visual Tracking for Humanoid Robot.

Proc. of 17th International Conference on Industrial and Engineering Applications of Artificial Intelligence and Expert Systems (IEA/AIE-2001),

Lecture Notes in Artificial Intelligence, No. 2070, Springer-Verlag.

Budapest, Hungary, June 2001.

- Ian Frank, Kumiko Ishii-Tanaka, Hiroshi G. Okuno, Jun-ichi Akita, Yukiko Nakagawa, K. Maeda, Kazuhiro Nakadai, and Hiroaki Kitano:

And The Fans are Going Wild! SIG plus MIKE.

RoboCup 2000: Robot Soccer World Cup IV,

Lecture Notes in Artificial Intelligence No.2019, 139-148,

Springer-Verlag, May 2001.

- Kazuhiro Nakadai, Ken-ichi Hidai, Hiroshi Mizoguchi, Hiroshi G. Okuno, Hiroaki Kitano:

Real-time Multiple Person Tracking by Face Recognition and Active Audition.

SIG-Challenge-01-5, pp.27-34, JSAI, Mar. 2001. in Japanese

- Hiroshi G. Okuno, Kazuhiro Nakadai, Lourens, T., Hiroaki Kitano:

Sound and Visual Tracking for Humanoid,

Proc. of 2000 International Conference on Information Society in the 21st Century: Emerging Technologies and New Challenges (IS2000),

254--261, Aizu-Wakamatsu, Nov. 2000.

- Kazuhiro Nakadai, Tatsuya Matsui, Hiroshi G. Okuno, Hiroaki Kitano:

Active Audition System and Humanoid Exterior Design.

Proc. of IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS-2000), 1453--1461, Takamatsu, Nov. 2000.

- Hiroaki Kitano, Hiroshi G. Okuno, Kazuhiro Nakadai, Theo Sabische, Tatsuya Matsui:

Design and Architecture of SIG the Humanoid: An Experimental Platform for Integrating Perception in RoboCup Humanoid Challenge.

Proc. of IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS-2000), 181--190, Takamatsu, Nov. 2000.

- Iris Fermin, Hiroshi Ishiguro, Hiroshi G. Okuno, Hiroaki Kitano:

A Framework for Integrating Sensory Information in a Humanoid Robot.

Proc. of IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS-2000), 1748--1753, Takamatsu, Nov. 2000.

- Kazuhiro Nakadai, Tino Lourens, Hiroshi G. Okuno, Hiroaki Kitano. Humanoid Active Audition System. Proc. of First IEEE-RAS International Conference on Humanoid Robots (Humanoids2000), Cambridge, Sep. 2000.

- Lourens, T., Kazuhiro Nakadai, Hiroshi G. Okuno, Hiroaki Kitano:

Selective Attention by Integration of Vision and Audition.

Proc. of First IEEE-RAS International Conference on Humanoid Robots (Humanoids2000), Cambridge, Sep. 2000.

- Kazuhiro Nakadai, Lourens, T., Hiroshi G. Okuno, Hiroaki Kitano:

Humanoid Active Audition System Improved by The Cover Acoustics.

In Mizoguchi, R. and Slaney, J. (eds) PRICAI-2000 Topics in Artificial Intelligence (Sixth Pacific Rim International Conference on Artificial Intelligence), 544--554, Lecture Notes in Artificial Intelligence No. 1886, Springer-Verlag, Melbourne, Aug. 2000.

- Ian Frank, Kumiko Ishii-Tanaka, Hiroshi G. Okuno, Kazuhiro Nakadai, Yukiko Nakagawa, K. Maeda, Hiroaki Kitano:

And The Fans are Going Wild! SIG plus MIKE.

Proc. of the Fourth Workshop on RoboCup (RoboCup-2000), 267--276, RoboCup, Melbourne, Aug. 2000.

- Kazuhiro Nakadai, Lourens, T., Hiroshi G. Okuno, Hiroaki Kitano:

Active Audition for Humanoid.

Proc. of the Seventeenth National Conference on Artificial Intelligence (AAAI-2000), 832-839, Austin, Aug. 2000.

Award

- IS-2000 Best Paper Award (Oct. 2000)

- JSAI Research Promotion Award (May 2001)

- International Society of Applied Intelligence, IEA/AIE-2001 Best Paper Award (1st Prize) (Jun. 2001)

- International Conference on Intelligent Robots and Systems (IROS 2001) Best Paper Nomination Finalist (Oct. 2002)

- SI 2002 Best Session Award (Dec. 2002)

Media

People

- Hiroaki Kitano

- Project Director

- Hiroshi G. Okuno

- Professor, Graduate School of Informatics, Kyoto University

- Kazuhiro Nakadai

- Researcher

- Tatsuya Matsui

- Former member, currently with Flower Robotics Inc.

- Ken-ichi Hidai

- Former member, currently with Sony Corp.

- Tino Lourens

- Former member, currently with GMD
